
Manage Pods

Learn how to create, start, stop, and terminate Pods using the Runpod console or CLI.

Before you begin

If you want to manage Pods using the Runpod CLI, you'll need to install the Runpod CLI and set your API key in its configuration. Run the following command, replacing [RUNPOD_API_KEY] with your API key:

Deploy a Pod

To create a Pod using the Runpod console:CPU configuration:

- Open the Pods page in the Runpod console and click the Deploy button.

- (Optional) Specify a network volume if you need to share data between multiple Pods, or to save data for later use.

- Select GPU or CPU using the buttons in the top-left corner of the window, and follow the configuration steps below.

- Select a graphics card (e.g., A40, RTX 4090, H100 SXM).

- Give your Pod a name using the Pod Name field.

- (Optional) Choose a Pod Template such as Runpod Pytorch 2.1 or Runpod Stable Diffusion.

- Specify your GPU count if you need multiple GPUs.

- Click Deploy On-Demand to deploy and start your Pod.

CUDA Version CompatibilityWhen using templates (especially community templates like  Note: Check the template name or documentation for CUDA requirements. When in doubt, select the latest CUDA version as newer drivers are backward compatible.

Note: Check the template name or documentation for CUDA requirements. When in doubt, select the latest CUDA version as newer drivers are backward compatible.

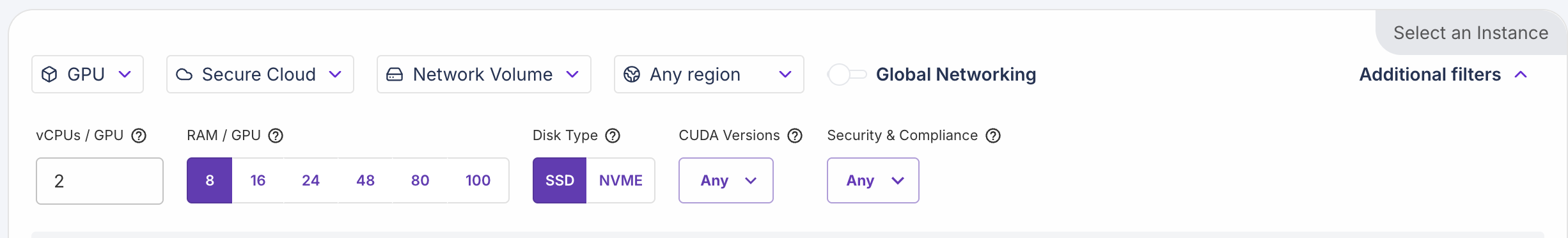

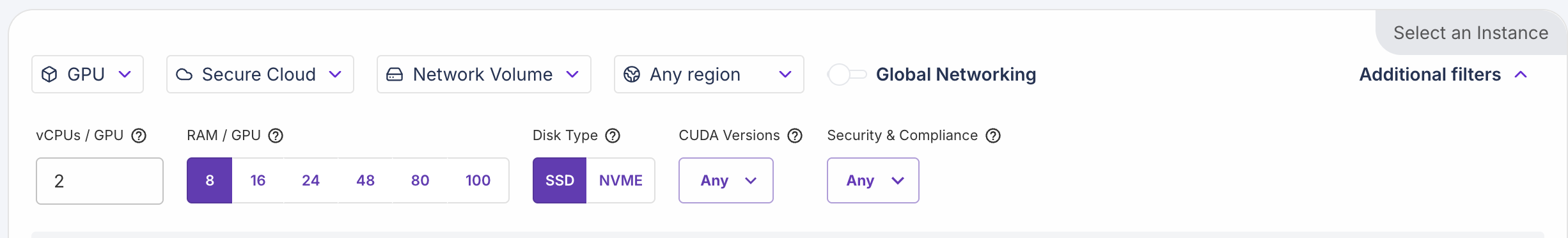

runpod/pytorch:2.8.0-py3.11-cuda12.8.1-cudnn-devel-ubuntu22.04), ensure the host machine’s CUDA driver version matches or exceeds the template’s requirements.If you encounter errors like “OCI runtime create failed” or “unsatisfied condition: cuda>=X.X”, you need to filter for compatible machines:- Click Additional filters in the Pod creation interface

- Click CUDA Versions filter dropdown

- Select a CUDA version that matches or exceeds your template’s requirements (e.g., if the template requires CUDA 12.8, select 12.8 or higher)

- Select a CPU type (e.g., CPU3/CPU5, Compute Optimized, General Purpose, Memory-Optimized).

- Specify the number of CPUs and quantity of RAM for your Pod by selecting an Instance Configuration.

- Give your Pod a name using the Pod Name field.

- Click Deploy On-Demand to deploy and start your Pod.
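The CUDA compatibility rule for GPU Pods can be sketched in a few lines of Python. The rule is that the host's CUDA version must match or exceed the one the template was built for. This is a minimal illustration, not a Runpod tool. It assumes the required version can be read from a `cudaX.Y` fragment in the image tag, as in the runpod/pytorch example. The function names and the list of filter values are hypothetical.

```python
import re
from typing import List, Optional, Tuple

Version = Tuple[int, int]


def required_cuda(image_tag: str) -> Optional[Version]:
    """Return (major, minor) from a 'cudaX.Y' fragment in the image tag, or None."""
    match = re.search(r"cuda(\d+)\.(\d+)", image_tag)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def parse_version(version: str) -> Version:
    """Parse '12.8' or '12.8.1' into (12, 8); a bare major like '13' becomes (13, 0)."""
    parts = version.split(".")
    minor = int(parts[1]) if len(parts) > 1 else 0
    return int(parts[0]), minor


def is_compatible(image_tag: str, host_cuda: str) -> Optional[bool]:
    """True if the host CUDA version matches or exceeds the template's requirement.

    Returns None when the tag does not state a CUDA version; in that case,
    check the template's documentation instead.
    """
    required = required_cuda(image_tag)
    if required is None:
        return None
    # Tuples compare element by element, so (12, 9) >= (12, 8) and (13, 0) >= (12, 8).
    return parse_version(host_cuda) >= required


def compatible_filters(image_tag: str, available: List[str]) -> List[str]:
    """Keep the CUDA Versions filter values that satisfy the template.

    Values whose compatibility is unknown (None) are kept, not discarded.
    """
    return [v for v in available if is_compatible(image_tag, v) is not False]


tag = "runpod/pytorch:2.8.0-py3.11-cuda12.8.1-cudnn-devel-ubuntu22.04"
print(required_cuda(tag))  # (12, 8)
print(compatible_filters(tag, ["11.8", "12.4", "12.8", "12.9"]))  # ['12.8', '12.9']
```

The comparison uses "greater than or equal" rather than an exact match. This mirrors the note that newer drivers are backward compatible.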

Custom templates

Runpod supports custom Pod templates that let you define your environment using a Dockerfile. With custom templates, you can:

- Install specific dependencies and packages.

- Configure your development environment.

- Create portable Docker images that work consistently across deployments.

- Share environments with team members for collaborative work.

Stop a Pod

If your Pod has a network volume attached, it cannot be stopped, only terminated. When you terminate the Pod, data in the /workspace directory will be preserved in the network volume, and you can regain access by deploying a new Pod with the same network volume attached.

When you stop a Pod, data in the /workspace directory is preserved. To learn more about how Pod storage works, see Storage overview.

Stopping a Pod effectively releases its GPU on the machine, so you may be allocated 0 GPUs when you start the Pod again. For more information, see the FAQ.

After a Pod is stopped, you will still be charged for its disk volume storage. If you don’t need to retain your Pod environment, you should terminate it completely.

To stop a Pod:

- Open the Pods page.

- Find the Pod you want to stop and expand it.

- Click the Stop button (square icon).

- Confirm by clicking the Stop Pod button.
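Stopping can also be scripted with `runpodctl stop pod`, the command used for timed stops in this guide. The following is a minimal Python sketch of a delayed stop. It assumes `runpodctl` is installed and configured inside the Pod. It also assumes the Pod ID is available in the `RUNPOD_POD_ID` environment variable; this is an assumption, and you can pass an ID copied from the console instead. The helper names `delayed_stop_command` and `schedule_stop` are hypothetical. The whole `sleep && runpodctl` sequence runs as one background process, so both the wait and the stop happen in the background.

```python
import os
import shlex
import subprocess
from typing import List


def delayed_stop_command(pod_id: str, hours: float) -> List[str]:
    """Build a shell command that waits `hours`, then stops the given Pod."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    seconds = int(hours * 3600)
    # Quote the ID so it reaches runpodctl as a single, literal argument.
    script = f"sleep {seconds} && runpodctl stop pod {shlex.quote(pod_id)}"
    return ["sh", "-c", script]


def schedule_stop(hours: float = 2.0) -> subprocess.Popen:
    """Launch the delayed stop in the background and return immediately."""
    pod_id = os.environ["RUNPOD_POD_ID"]  # assumed to be set inside the Pod
    return subprocess.Popen(
        delayed_stop_command(pod_id, hours),
        start_new_session=True,  # own session: closing the terminal does not stop the timer
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
```

Calling `schedule_stop()` from a Python process inside the Pod starts a 2-hour timer and returns immediately.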

Stop a Pod after a period of time

You can also stop a Pod after a specified period of time. The examples below show how to use the CLI or web terminal to schedule a Pod to stop after 2 hours of runtime.

Use the following command to stop a Pod after 2 hours:

This command uses sleep to wait for 2 hours before executing the runpodctl stop pod command to stop the Pod. The & at the end runs the command in the background, allowing you to continue using the SSH session.

Start a Pod

Pods start as soon as they are created, but you can resume a Pod that has been stopped.

To start a Pod:

- Open the Pods page.

- Find the Pod you want to start and expand it.

- Click the Start button (play icon).

Terminate a Pod

Terminating a Pod permanently deletes all associated data that isn’t stored in a network volume. Be sure to export or download any data that you’ll need to access again.

To terminate a Pod:

- Open the Pods page.

- Find the Pod you want to terminate and expand it.

- Stop the Pod if it’s running.

- Click the Terminate button (trash icon).

- Confirm by clicking the Yes button.
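The stop, start, and terminate rules in this guide form a small state machine. The sketch below models them in Python as a hypothetical `Pod` class. It illustrates the documented rules and is not the Runpod API. It only tracks state; it does not release GPUs or delete data.

```python
from dataclasses import dataclass
from enum import Enum


class PodState(Enum):
    RUNNING = "running"
    STOPPED = "stopped"
    TERMINATED = "terminated"


@dataclass
class Pod:
    name: str
    has_network_volume: bool = False
    state: PodState = PodState.RUNNING  # Pods start as soon as they are created

    def stop(self) -> None:
        if self.has_network_volume:
            raise ValueError("a Pod with a network volume can only be terminated")
        if self.state is not PodState.RUNNING:
            raise ValueError(f"cannot stop a Pod that is {self.state.value}")
        # The GPU is released; on restart the Pod may be allocated 0 GPUs.
        self.state = PodState.STOPPED

    def start(self) -> None:
        if self.state is not PodState.STOPPED:
            raise ValueError(f"cannot start a Pod that is {self.state.value}")
        self.state = PodState.RUNNING

    def terminate(self) -> None:
        if self.state is PodState.TERMINATED:
            raise ValueError("Pod is already terminated")
        if self.state is PodState.RUNNING and not self.has_network_volume:
            self.stop()  # mirrors the console steps: stop a running Pod first
        # Everything not stored on a network volume is deleted at this point.
        self.state = PodState.TERMINATED

    @property
    def billed_for_disk(self) -> bool:
        # Disk storage is charged until termination, including while stopped.
        return self.state is not PodState.TERMINATED
```

For example, calling `stop()` on `Pod("train", has_network_volume=True)` raises `ValueError`. This matches the rule that such Pods can only be terminated.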

List Pods

You can find a list of all your Pods on the Pods page of the web interface. If you're using the CLI, use the following command to list your Pods:

Access logs

Pods provide two types of logs to help you monitor and troubleshoot your workloads:

- Container logs capture all output sent to your console's standard output, including application logs and print statements.

- System logs provide detailed information about your Pod’s lifecycle, such as container creation, image download, extraction, startup, and shutdown events.